I'm continually trying to expand my machine learning skills. I realized that I hadn't really used TensorFlow in any meaningful way, and I wanted to try an image classification project. There are some terrific projects to try out, but this one stood out in a few key ways: (1) the source of the data, (2) setting up multiple convolutional layers, and (3) VGG16, a model that was new to me at the time of writing.

Setup

I chose image classification because I have experience working with the MNIST dataset of handwritten digits. I did that project in MATLAB and plan to redo it with machine learning in Python, so I wanted a little practice first.

Methodology

I start with a set of images with known labels: mountain, street, glacier, buildings, sea, and forest. Because I'm using a convolutional neural network (CNN) in TensorFlow, the data needs some shaping first: I have to account for the image dimensions and whether the images are in color (RGB), and I have to track the data types as I go.
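To make that shape and data-type bookkeeping concrete, here is a small standard-library sketch. It is not the author's preprocessing code. The 150x150 image size and the batch size of 32 are assumptions, because the post doesn't state them. The alphabetical label order mirrors how Keras' `image_dataset_from_directory` orders class names it infers from folder names.

```python
import math

# Assumed values: the post does not give the image size or batch size.
IMG_HEIGHT, IMG_WIDTH = 150, 150
CHANNELS = 3          # RGB color; a grayscale image would have 1 channel
BATCH_SIZE = 32

# The six classes, mapped to integer labels in alphabetical order.
CLASS_NAMES = sorted(["mountain", "street", "glacier", "buildings", "sea", "forest"])
LABELS = {name: index for index, name in enumerate(CLASS_NAMES)}

BYTES_PER_VALUE = {"uint8": 1, "float32": 4}


def batch_shape(batch_size=BATCH_SIZE):
    """Shape of one batch in the channels-last layout Keras uses by default."""
    return (batch_size, IMG_HEIGHT, IMG_WIDTH, CHANNELS)


def batch_bytes(dtype, batch_size=BATCH_SIZE):
    """Memory needed to hold one batch of pixels in the given data type."""
    return math.prod(batch_shape(batch_size)) * BYTES_PER_VALUE[dtype]


def scale_pixel(value):
    """Convert an 8-bit pixel value (0-255) to a float in [0, 1]."""
    return value / 255.0
```

Under these assumptions, one batch takes 2,160,000 bytes as raw `uint8` pixels. Once cast to `float32` for training, it takes four times that (8,640,000 bytes), which is one reason to keep an eye on data types.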

I also took some advice from a bona fide ML engineer: showcase what stakeholders want to see. It's one thing to know how to do this; it's quite another to explain it with the right visuals.

Now for the model. I started from examples in a few different sources but quickly decided to add some layers to see what would happen. Here's the model I ended up with.

There are nine layers. The first convolutional layer has 32 filters, each 3x3; this is the window that slides across the image. It takes the input shape defined during preprocessing, and I followed it with a MaxPooling layer that downsamples the output by 2x2. The next few layers repeat the same pattern, with the final convolutional layer bumped up to 64 filters. The model finishes with a flatten layer, a dense layer with 128 neurons, and a final dense layer with 6 neurons, one per class. I used the standard ReLU activation for the convolutional layers and the 128-neuron dense layer. The final layer uses softmax instead, to produce a probability distribution over the six classes.
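Walking through the layer stack by hand shows how the feature maps shrink and where the parameters live. This is a standard-library sketch, not the author's code. It assumes a 150x150 RGB input, Keras' default `"valid"` padding with stride 1, and 2x2 pooling with stride 2. It also reads "the next few layers are the same" as one more 32-filter convolution and pooling pair before the 64-filter one.

```python
import math

# Assumptions: 150x150 RGB input, "valid" padding, stride-1 convolutions,
# and 2x2 max pooling with stride 2.
INPUT_SHAPE = (150, 150, 3)

# One reading of the nine layers described above.
LAYERS = [
    ("conv", 32, 3),
    ("pool",),
    ("conv", 32, 3),
    ("pool",),
    ("conv", 64, 3),
    ("pool",),
    ("flatten",),
    ("dense", 128),   # ReLU
    ("dense", 6),     # softmax, one output per class
]


def summarize(layers, shape=INPUT_SHAPE):
    """Return (layer kind, output shape, trainable parameters) for each layer."""
    rows = []
    for kind, *args in layers:
        if kind == "conv":
            filters, k = args
            h, w, c = shape
            params = (k * k * c + 1) * filters       # weights plus one bias per filter
            shape = (h - k + 1, w - k + 1, filters)  # "valid" padding trims k-1 pixels
        elif kind == "pool":
            h, w, c = shape
            params = 0
            shape = (h // 2, w // 2, c)              # 2x2 pooling halves each side
        elif kind == "flatten":
            params = 0
            shape = (math.prod(shape),)
        elif kind == "dense":
            (units,) = args
            params = (shape[0] + 1) * units          # weights plus one bias per unit
            shape = (units,)
        else:
            raise ValueError(f"unknown layer kind: {kind}")
        rows.append((kind, shape, params))
    return rows


for kind, shape, params in summarize(LAYERS):
    print(f"{kind:8} {str(shape):16} {params:>10,}")
```

Under these assumptions, the model has 2,397,030 trainable parameters. All but 29,414 of them sit in the 128-neuron dense layer, because that layer connects to every one of the 18,496 flattened values.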

Results

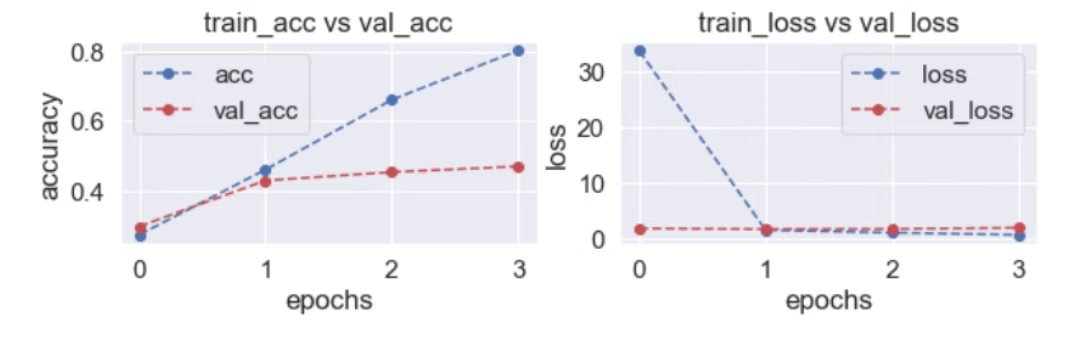

The results were compelling, and it's clear I have more exploring to do. Here is the first pass, before I added the extra layers:

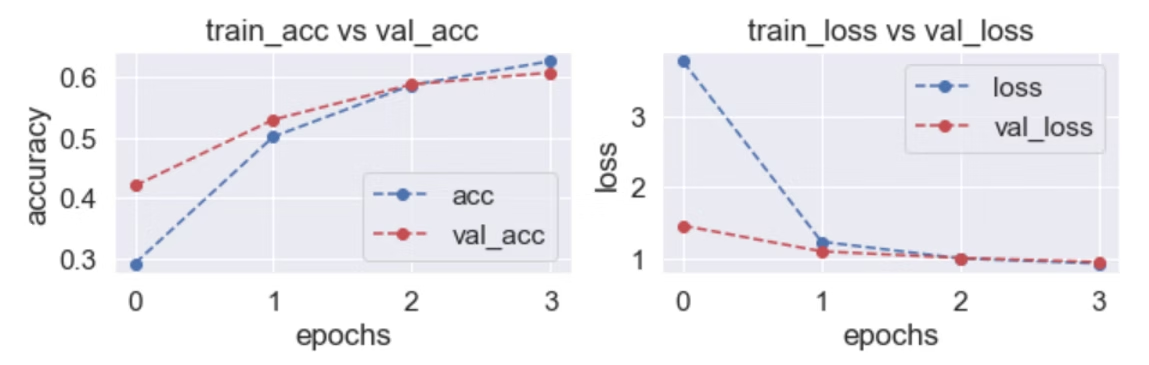

Initial results showing accuracy and validation curves crossing over at less than one epoch

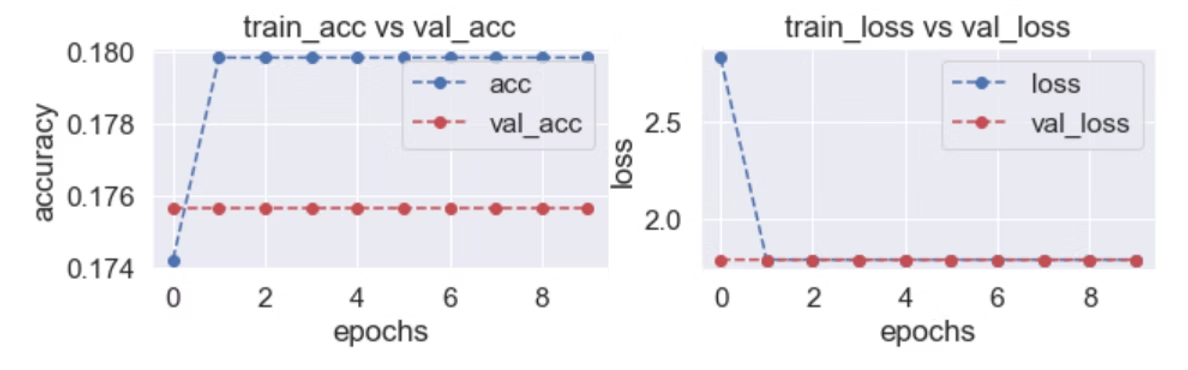
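The crossover point in plots like these can be located numerically. The helper below is hypothetical and not from the post. It takes per-epoch lists such as `history.history["accuracy"]` and `history.history["val_accuracy"]` from a Keras `History` object; those keys appear when the model is compiled with `metrics=["accuracy"]` and trained with validation data. It then interpolates linearly where the two curves swap places. Epochs are counted from 0, as on a plot of the raw lists.

```python
def first_crossover(train, val):
    """Return the (fractional) epoch where two metric curves first meet or cross.

    Epochs are list indices starting at 0. Returns None if the curves never cross.
    """
    diffs = [t - v for t, v in zip(train, val)]
    for i, d in enumerate(diffs):
        if d == 0:
            return float(i)  # the curves touch exactly at a recorded epoch
        if i + 1 < len(diffs) and d * diffs[i + 1] < 0:
            # Sign change: interpolate linearly between epoch i and epoch i + 1.
            return i + d / (d - diffs[i + 1])
    return None
```

With made-up inputs, `first_crossover([0.25, 0.75], [0.5, 0.5])` returns `0.5`: the curves cross halfway between the first two recorded epochs, which is what a crossover "at less than one epoch" looks like on such a plot.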

Out of the gate, the accuracy and validation curves cross over at less than one epoch. Yikes. I added some layers and got the following results:

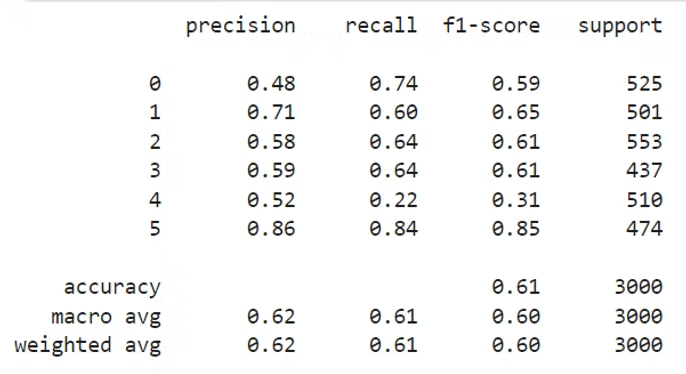

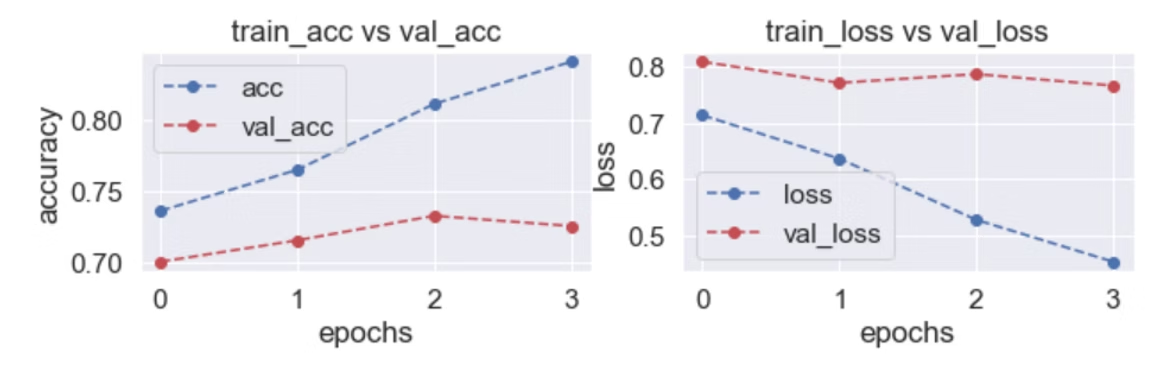

Much better results after adding layers - more epochs and better overall accuracy

This is much better in terms of both epochs and overall accuracy. It matches what I'd expect when working with a dataset for the first time: the results fluctuate. On a project this small, directional improvements are possible, and adding layers delivered one: more layers gave slightly better results. Real-world use is still a long way off, though. Adding more layers may not be practical, and other changes likely make more sense. The next table helps illustrate that last point:

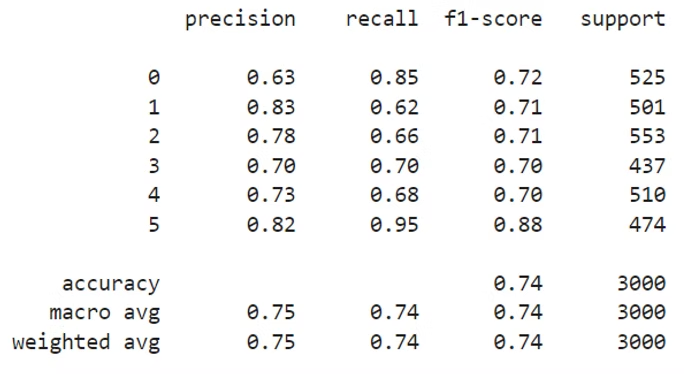

Results after adding image rotation - precision and recall both improve nicely
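Precision and recall are per-class ratios of counts. Precision is the share of images predicted as a class that really belong to it. Recall is the share of a class's images that the model finds. Here is a minimal standard-library sketch. scikit-learn's `classification_report` computes the same quantities, if that is what produced these tables; the post doesn't say.

```python
CLASSES = ["buildings", "forest", "glacier", "mountain", "sea", "street"]


def precision_recall(y_true, y_pred, classes=CLASSES):
    """Map each class to (precision, recall), using 0.0 when a ratio is undefined."""
    pairs = list(zip(y_true, y_pred))
    report = {}
    for c in classes:
        tp = sum(t == c and p == c for t, p in pairs)  # correctly predicted as c
        fp = sum(t != c and p == c for t, p in pairs)  # predicted c, actually another class
        fn = sum(t == c and p != c for t, p in pairs)  # actually c, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        report[c] = (precision, recall)
    return report
```

A single mistake moves both metrics. Suppose a sea image is predicted as a glacier. Recall drops for `sea` because one sea image was missed, and precision drops for `glacier` because one glacier prediction was wrong.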

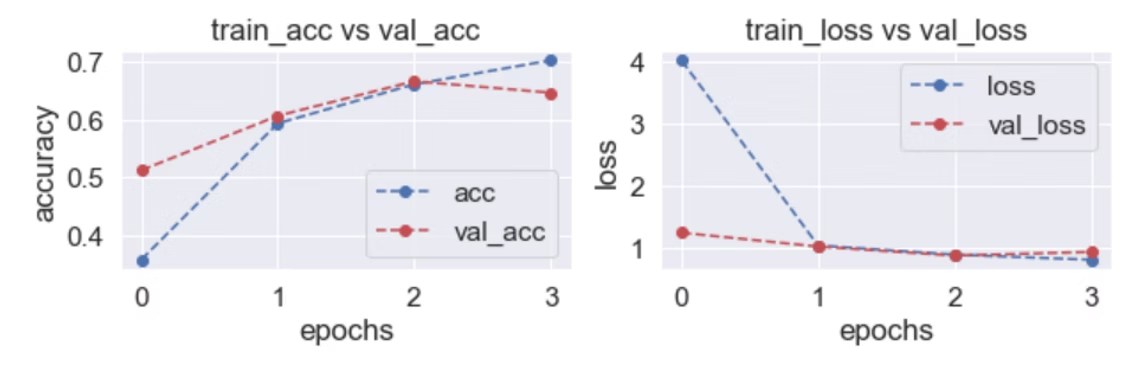
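The post doesn't say how the images were rotated. As a hedged illustration, the sketch below applies a random number of 90-degree turns to an image stored as a list of pixel rows. A Keras pipeline would more likely use `tf.keras.layers.RandomRotation`, which rotates by a random fraction of a full turn. Either way, the label stays the same, since a rotated glacier is still a glacier.

```python
import random


def rotate90(image):
    """Rotate an image (a list of pixel rows) 90 degrees clockwise."""
    # Reversing the row order and then transposing gives a clockwise quarter turn.
    return [list(row) for row in zip(*image[::-1])]


def random_rotate(image, label, rng=random):
    """Return the image after 0-3 random quarter turns, with its label unchanged."""
    for _ in range(rng.randrange(4)):
        image = rotate90(image)
    return image, label
```

Quarter turns keep square images the same size and need no fill pixels. Arbitrary angles, as `RandomRotation` produces, have to fill in the corners.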

This time I rotated the images to see what effect that would have. The curves above still need some explaining, but precision and recall both improve nicely. Next, I wanted to change the scanning window, so for fun I swapped the model's 3x3 filters for 5x5 ones:

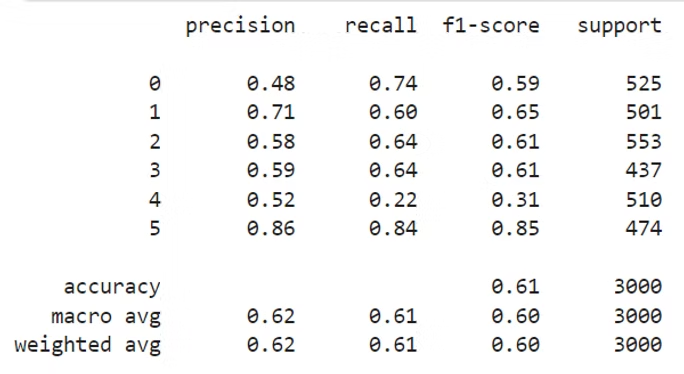

Very interesting results with 5x5 filters - more "natural" curves with crossover extending to the second epoch

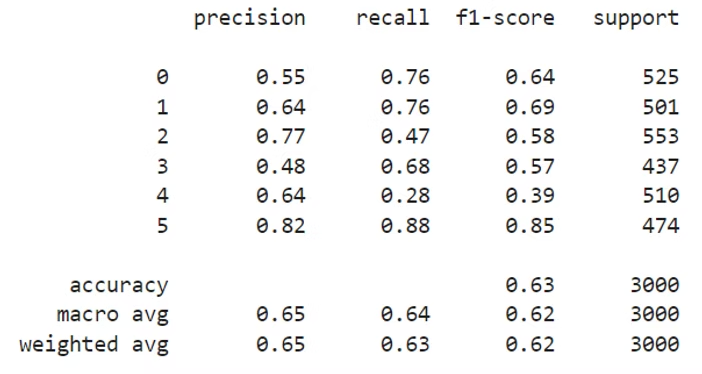
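Switching from 3x3 to 5x5 filters has a counterintuitive effect on model size, which a quick calculation shows. This standard-library sketch assumes a 150x150 RGB input and "valid" padding. It also assumes 2x2 pooling after each of three convolutions (32, 32, and 64 filters), followed by dense layers of 128 and 6 units. The input size in particular is an assumption.

```python
def param_count(kernel, input_shape=(150, 150, 3), filters=(32, 32, 64), dense=(128, 6)):
    """Return (flattened feature count, conv parameters, total parameters)."""
    h, w, c = input_shape
    conv_params = 0
    for f in filters:
        conv_params += (kernel * kernel * c + 1) * f  # weights plus biases
        h, w, c = h - kernel + 1, w - kernel + 1, f   # "valid" convolution
        h, w = h // 2, w // 2                         # 2x2 max pooling
    flat = h * w * c
    total, n_in = conv_params, flat
    for units in dense:
        total += (n_in + 1) * units                   # fully connected layer
        n_in = units
    return flat, conv_params, total


for k in (3, 5):
    flat, conv, total = param_count(k)
    print(f"{k}x{k}: flatten={flat:,} conv params={conv:,} total={total:,}")
```

Under these assumptions, the convolutional layers get nearly three times as many weights (79,328 versus 28,640). Yet the whole model shrinks from 2,397,030 to 1,923,430 parameters. The larger kernels trim more pixels at each stage, which leaves a smaller 15x15x64 map feeding the dense layer.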

Very interesting! The curves look a little more like what I'd expect, with the crossover point extending to the second epoch. Precision and recall, though, line up closely with the original run that used fewer layers. The last thing I wanted to try was the VGG16 model. I implemented it with very little hyperparameter tuning, and it produced the following chart:

VGG16 implementation results - still a work in progress
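For scale, consider VGG16's convolutional base, which is what `tf.keras.applications.VGG16(include_top=False, weights="imagenet")` loads. It stacks thirteen 3x3 convolutions in five blocks, and each block ends in 2x2 max pooling. Counting its parameters the same way as for a small CNN shows how much larger it is than a model built from scratch. This is a standard-library sketch, and the 150x150 input size is an assumption.

```python
# VGG16 convolutional blocks: (filters, number of 3x3 convolutions in the block).
VGG16_BLOCKS = [(64, 2), (128, 2), (256, 3), (512, 3), (512, 3)]


def vgg16_conv_params(in_channels=3, kernel=3):
    """Trainable parameters in VGG16's convolutional base (weights plus biases)."""
    total, c = 0, in_channels
    for filters, n_convs in VGG16_BLOCKS:
        for _ in range(n_convs):
            total += (kernel * kernel * c + 1) * filters
            c = filters
    return total


def vgg16_feature_shape(height, width):
    """Output shape of the base: 'same' padding keeps size, each pooling halves it."""
    for _ in VGG16_BLOCKS:
        height, width = height // 2, width // 2
    return height, width, VGG16_BLOCKS[-1][0]


print(f"{vgg16_conv_params():,}")
print(vgg16_feature_shape(150, 150))
```

That comes to 14,714,688 parameters before any classifier head is added. A 150x150 image leaves the base as a 4x4x512 feature map. A common approach is to freeze these pretrained weights and train only a small head for the six classes, though the post doesn't say how its VGG16 run was set up.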

This last piece is still a work in progress. LOL.