I'm a huge fan of ByteByteGo and a few weeks ago there was an example that took public quarterly reports from Google's parent company, tokenized them, vectorized/embedded them, added them to a database, then used a LLM to interact with it. There are lots of other examples of then taking the same model and having the chain perform a task.

Real-World Application Potential

Honestly, I was so excited to start this project because I can think of several ways to deploy this. Using our own data to generate insights, but then potentially triggering decisions or a cascade of decisions seems like an intriguing value proposition.

Setup

The reference above to ByteByteGo was the main driver for this, but I had to learn quite a bit about vector databases, encoding, and API keys. These aren't difficult subjects but the combination and nuance to some of the elements left me a bit frustrated at times. There isn't too much code at all, so the focus for me was on the understanding of each step and how the external bits work together to create the chain.

A key challenge for me was determining whether or not I wanted to pay for a small amount of OpenAI data. There are other ways to do this, but I hadn't yet had a chance to play with sizing a project to protect my wallet, so I dove in.

Methodology

The example takes public quarterly reports from Google and saves them all locally as .pdfs. I had to upgrade my Python version from 3.8 to 3.8.1 to make all of the bits work together, but that's fairly straightforward as long as you don't accidentally load the 32-bit version of 3.8.1 and wonder what in the heck is going on. The cool kids all use virtual environments to do these kinds of things, so I'll be setting that up after this project.

With the .pdfs downloaded, I load them all into a "loader" object that then gets split up into chunks. Yes chunks. I like that name - it's utilitarian and its functional. This turned out to be a key element for me because considering the free tier of OpenAI meant that I had to think through optimization (fortunately later in the project and I was able to progress up to that point, but regardless...) choices. This is the first one. The chunk size ultimately impacts how many vectors are loaded into the database AND how large each vector is. We'll come back to this.

I tried several sizes: 500, 1000, 2000, and 19880. That last one is a bit random but I was working with some datasheets that keyed me in on the number 60 as the number of requests per minute I wanted to achieve. I was going to do this by batching in nice round increments of something, and the way it worked out was my chunk size had to be 19880. Funny story though - if you're going to pay for data, none of that mattered. I spent $0.28 in the end, so luckily it was trivial. Still a worthwhile exercise when considering deployment models in different scenarios.

Cost Analysis Experiment

The next two steps were to 1. Obtain a Pinecone index (and API keys) and 2. Obtain OpenAI API keys. Pinecone is totally free and this process was easy. OpenAI can be free with careful optimization, but in the end I chose to pay a bit (for the record, I did try other encoders - Universal Sentence Encoder, SentencePiece, and a few others). With the keys sorted out, I explored the data a bit. A list of data chunks is generated and they need to be strings for further processing.

I then went down a rabbit hole to explore the string types, how to check for strings, and how to convert these if I needed to. Once I encoded things with "cl100k_base" and "text-embedding-ada-02", the data was even more interesting to explore. I compared the length of the encoder results to the original chunked document, found the index and verified all was correct. Lots of opportunities for functions in there.

I then did some calculations to try and get a feel for how much this process would cost me. At the aforementioned chunk size of 19880, I calculated a cost of $0.36. When the chunk size was 500, the cost went up to $7.53. All of this information is found on OpenAI's superb documentation pages.

The next step was to use OpenAIEmbeddings to embed the data, line up API keys, and send it! I ultimately decided to include a batch size of 50 to try to optimize the load on the OpenAI end, and that was the trick in the end. Fortunately I came in under my expected cost and I know there is more optimization to do that would push the cost even lower. For these purposes though, I'm ok with under $0.30.

I then tried a cosine similarity search and returned data successfully.

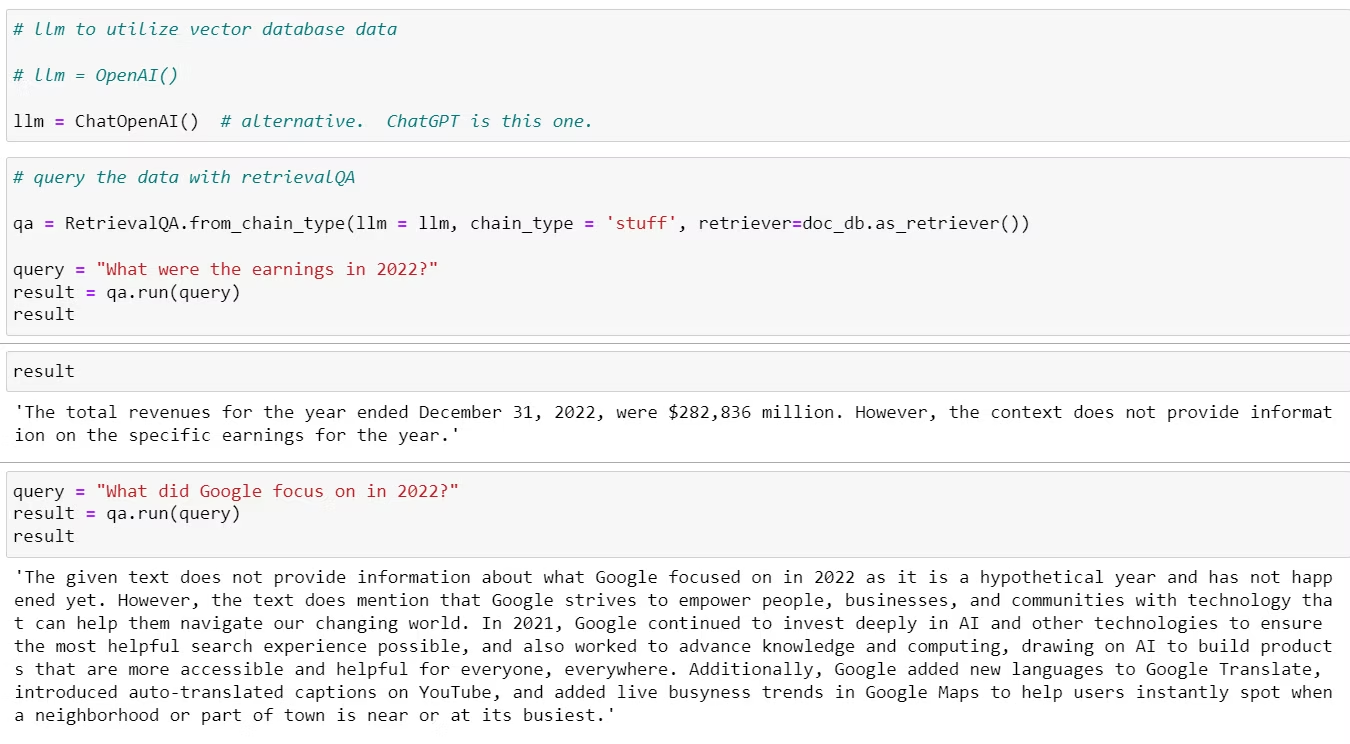

Next up was the LLM integration. ChatGPT is called by setting the llm parameter to "ChatOpenAI()", which is a better way to go than "OpenAI()" all by itself. Next up was simply constructing the query with RetrievalQA to both pose the query and run the result.

Results

I was so excited when this started working that I didn't even care that the result was "I don't know", an artifact of setting llm = OpenAI() instead of how I described above. This simple adjustment yielded:

Success! The LangChain system working with Google quarterly reports

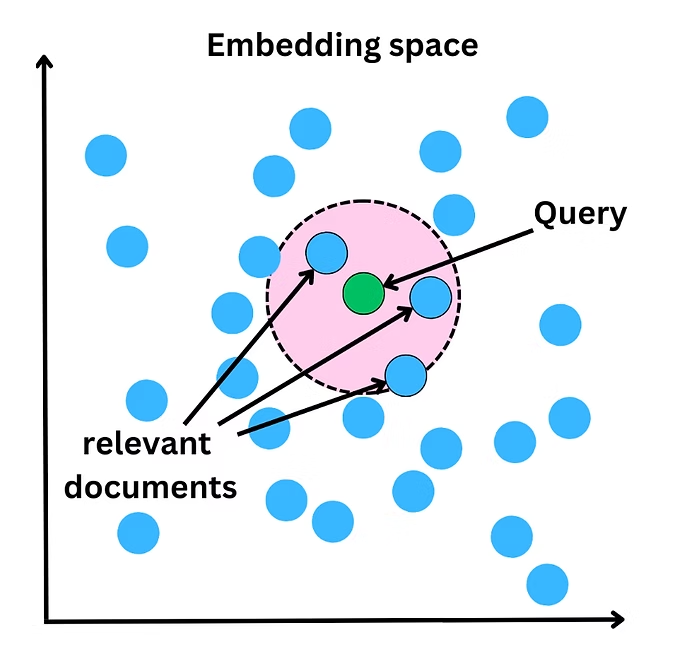

The working principle is the cosine similarity searched for the query among the vectors in Pinecone. From the BBG example directly:

Visualization of how the vector embedding space works for similarity search

What's really neat to me is that this isn't all there is to the chain. From here, one can send an email, trigger an action, update data, or make an informed decision, which is what I suppose this is really all about.

I am going to try some of these options, likely by calling a Python instance and performing some actions automatically. In my view, there is an appreciable amount of potential to apply this to real-world scenarios.

Project Success

In the end I am delighted to have had a reason to generate my own private keys, pay a little money to get a thing done, and to have learned an incredible amount of new information about a tool that I just read about in an email.