This is a Machine Learning project done in Matlab. It's a classic handwritten digit recognition problem using the MNIST database.

Setup

The major tasks with this project was to build a Naive Bayes Classifier model, train a dataset, and apply the model to a holdout set of data. Once the supplied data was consumed, we were to submit our own handwritten digits to be evaluated by the model.

Project Objectives

• Build a Naive Bayes Classifier from scratch

• Train the model on the MNIST training dataset

• Test on holdout data to evaluate performance

• Submit custom handwritten digits for classification

Methodology

Feature Engineering

To develop the classifier, single pixels were used as features. There are alternative ways to do this, but using each pixel as a feature seemed to allow for straight-forward application of the model to the training and test sets. For the training set, each image was read into Matlab and each pixel was given a 1 if it was above a threshold of 128 and a 0 if it was below. This halfway point between 0 and 255 was selected somewhat arbitrarily, and it was used as a starting point. With the images now converted to an array of 1s and 0s, a vector was then created for each image.

Probability Calculation

The probability of each image was calculated, then each vector was combined into a 784 x 60000 matrix and the label for each was determined by identifying the column index of the corresponding label file and applying it to the image matrix. The vectors were then grouped according to label. A probability matrix was generated by summing the number of ones in each vector row, then dividing by the total number of images for that label, resulting in a 784 x 10 matrix. Lastly, a Laplacian smoothing factor was applied to the probability matrix to ensure no zeros existed.

The probability was calculated by comparing each test image to each column in the probability matrix. Each test image was selected and put into binary vector format. The probability was calculated by multiplying together the values from each column of the probability matrix in a specific pattern. This is shown by the equation below, where PMV = probability matrix value:

Probability calculation formula for Naive Bayes classification

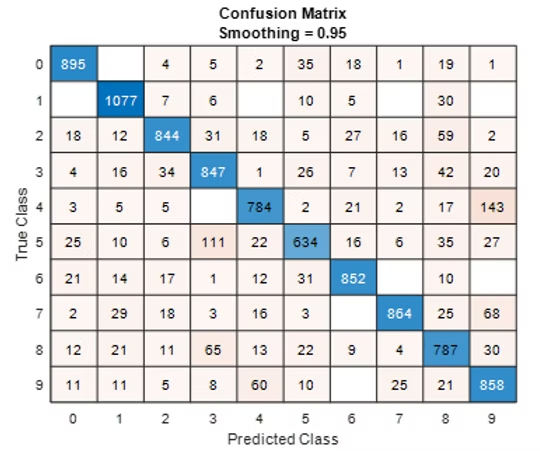

Repeating this for all labels yielded a 1 x 10 matrix, accounting for all possible outcomes. A logic check for the highest value was implemented, followed by a rule to accept the first highest value to determine the predicted label. An example of the confusion matrix generated for the test data is shown below:

Confusion matrix showing classification performance across all digits

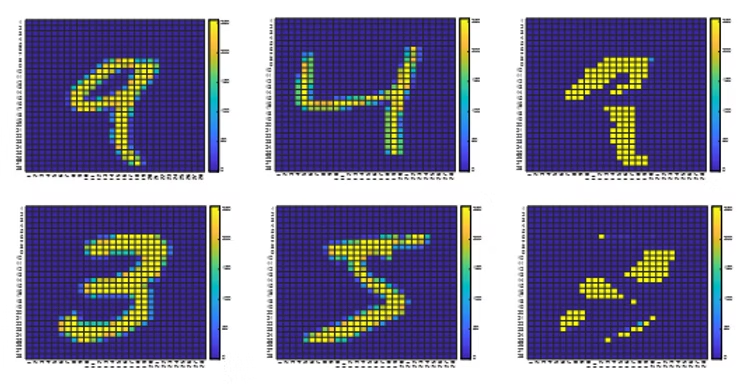

I really like confusion matrices because of their simplicity. It's clear there is trouble with 4/9 and 5/3, and this makes sense given just how similar these number pairs are. Taking a sample of each of these pairs and comparing it to the odds ratio for the combination is shown below:

Sample digits showing common misclassification pairs (4/9 and 5/3)

Results

The model prediction accuracy was 84.6% with optimized smoothing and binary threshold values. Not super great results, but on-par with industry standard for a Bayesian classifier without any other bells and whistles. Accuracy improves a bit when the smoothing factor is changed, and further improvements can be realized when the threshold is lowered (slightly). I suspect that a unique smoothing factor per digit would further improve the results seen here.

Performance Summary

• Final accuracy: 84.6%

• Binary threshold: 128 (0-255 scale)

• Laplacian smoothing applied

• Common misclassifications: 4/9 and 5/3 pairs

• Performance comparable to standard Naive Bayes implementations

Analysis & Insights

In all, the Naive Bayes classifier seems to be a reasonable starting point in classifying these kinds of images under these conditions. It would be interesting to do this project again with groups of pixels instead of single pixels to see if distinguishing characteristics can be drawn from the data that way. Other classifiers would be interesting to implement as well.

In all, a great way to see the underpinnings of the Naive Bayes classifier outside of a Python module!

Future Improvements

Several potential enhancements could improve the classifier's performance:

• Individual smoothing factors per digit class rather than a global factor

• Feature extraction using groups of pixels instead of single pixels

• Different threshold values optimized per digit

• Implementation of other classification algorithms for comparison

• Data augmentation techniques to expand the training set

Lessons Learned

This project provided valuable hands-on experience with the fundamentals of machine learning classification. Building the Naive Bayes classifier from scratch in MATLAB gave deep insights into how probability-based classification works at its core.

The confusion matrix analysis revealed the intuitive challenge that even humans face - distinguishing between visually similar digits like 4/9 and 5/3. This reinforced the importance of feature engineering and the potential benefits of more sophisticated preprocessing techniques.

Working directly with the MNIST dataset and implementing the mathematical foundations without relying on pre-built libraries provided a solid understanding of the underlying algorithms that power modern machine learning frameworks.