I initially was unenthusiastic about diving into anything to do with text. With the LLMs doing all kinds of neat things recently, I figured it was time to start exploring.

Setup

Part of the reason I wanted to explore sentiment analysis is because I have many models built in my notebook and I was curious to know how extensible they were to text data. If you open the notebook, you'll see what a mess I created at first, then cleaned up, then added some dataframe manipulation that I was used to. In a funny way I am delighted it took me a bit to figure things out because finding so many roadblocks (no tokenization, no lemmatization, no vectorization...at first) so quickly is what i really enjoy about projects. Fortunately I had some great examples to follow and I was able to blend my own approaches to text classification.

Some key challenges I faced were lack of familiarity with the nltk package, with vectorization, and with text data manipulation. I always want to put things into a dataframe right away!

Methodology

I take a known set of movie reviews from the movie_reviews corpus, download it, and begin to take it apart in search of understanding the review text (what the person wrote about the movie) and the overall rating (category). This particular dataset is an object, which presented problems for my traditional methodology of churning through things.

I extracted the total number of words, categories, unique words, word frequencies, and was able to create the following format for the dataset:

[review words that someone wrote], rating

This was my first breakthrough, and I wasn't expecting it. I next created a feature vector, wrote a function to find features and create feature sets. With these feature sets I used the trusty scikitlearn package to create a SVC (SVM) model, and test its accuracy. I'll talk more about this in the Results section.

Finally, I wanted to compare the SVC to some other classifier models that I'm very familiar with: logistic regression, decision trees, and random forest. My ultimate goal is to use this as a starting point to run pycaret, where I'll be able to look across models easily and compare them together (this piece is not yet done).

Results

When I reduced the vector size to [:20], I was able to achieve an accuracy of 0.51. Boo.

The Coin Flip Problem

Obviously this is pretty poor and I could do well to find a coin I like and flip that the next time I want to use such a small vector.

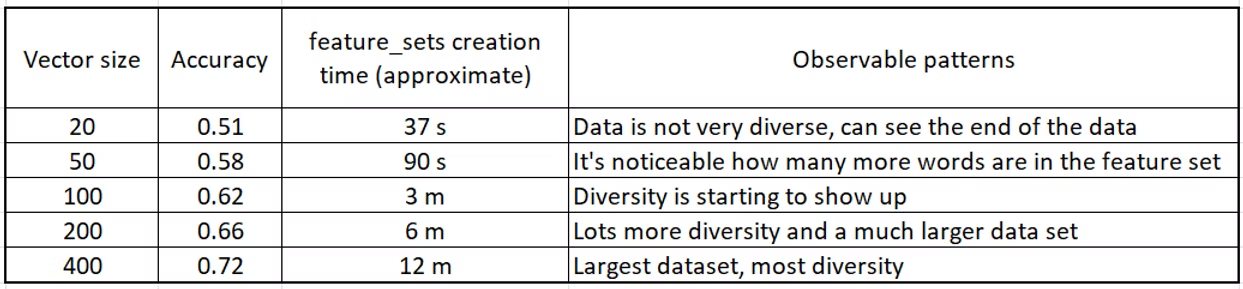

Steadily increasing the vector size based on my own personal tolerance for waiting, I found a personal limit at 12 minutes for this step. That corresponded to a vector size of 400. Given that the total size of the dataset is 2000 reviews with almost 40,000 unique words, only using 1% wasn't going to be acceptable going forward. Some interesting comparisons in the table below:

Not a professional table for inclusion in a paper, but it captures the directionality of key findings

Ok so this is not a professional table for inclusion in a paper, but it does capture the directionality of a few key things. One, that the vector size has a strong correlation to accuracy when the vector size is approximately 1% of the total data. Two, that accuracy can be increased rapidly if a little more patience and processing time can be accommodated. Three, that my limit is 12 minutes per step! I think I need a new computer or I need to learn multi-threading (a project for later). Lastly, under "Observable patterns", I use the term 'diverse' to describe the uniformity of the data I saw. Less diverse = a lot of punctuation and not many words, or the words were very similar. As diversity increases, I saw many more words and different words. Also the data sets grew much larger with a larger vector.

In all, I wanted to improve accuracy if possible, so I turned to another method of utilizing the data: tokenization and lemmatization, combined with vectorization. These things combined help the models interpret the text numerically as a vector, which simplifies (to a degree) the interconnectedness of the patterns one is trying to suss out.

Breakthrough Results

Using vectorization with the tokens and lemmas led to an accuracy of 0.816. Pretty dramatic.

It turns out that I used the tf-idf (term frequency-inverse document frequency) vectorizer which is pretty common. There are several types but this is a common one. I was able to run the other models I referred to earlier and achieved a similar accuracy score for each one (all around 0.82).

It's notable that I did not tune the hyperparameters of any of the models at first. All of the accuracy numbers discussed here are without tuning. The results can improve a bit with tuning for all of the models.

Another main goal of this project is to compare models together to see which one applies to a dataset with this kind of diversity and a dataset of this size. The primary reason is to have a baseline for future projects where I might be dealing with significantly larger amounts of data or data that diversifies in a different way.

It should be noted that I could have created a reasonable approximation of the un-tuned models by sampling from the data and running the first model that way. I did not try that with this project but I've done that on others and have had success.

In the .ipynb file there is some fancification done by way of a confusion matrix and I'll be adding the pycaret section when I have some time.

Overall I have a much greater appreciation for text/language-based models. While there is more to explore, this project gave me a great starting point for more experimentation.